Grok Nudify, Part I: The TfGBV-Grok Dataset

Grok's runaway generative AI has allowed users of Elon Musk's X to create non-consensual sexual content depicting people on his platform, especially women, and including minors.

While I was writing this blog post and the one that follows it, many journalists and writers published their own (similar) investigations into Grok’s recent behavior. These include Tristan Lee’s post in DeCoherence, Matt Burgess & Maddy Varner’s story in Wired, Giselle Woodley’s call to action in The Conversation, and Olivia Solon’s article in Bloomberg. I’d encourage you to read their work for background on what I discuss here; given this recent coverage, I have retooled this post to be more analytical than descriptive.

Content Warning: While this post does not reproduce any images, it contains numerous descriptions of non-consensual image generation that some readers may find disturbing or triggering. Please use discretion when reading.

As many predicted last year (including the US’s largest anti-sexual violence organization), Grok’s “spicy” AI video setting has led to a whole new wave of online sexual abuse. For those unaware, the AI program recently began generating user-requested images of minors in bikinis, as well as of real (IRL) women in bikinis. The humans behind Grok then used their AI vehicle to apologize for sexualizing minors, masquerading as the chatbot to avoid human blame. Despite their (or Grok’s?) apology, X doesn’t appear to have implemented many of the safeguards that similar generative AI programs have put in place (with varying degrees of success). Almost immediately, Grok’s ‘apology’ became a minor copypasta joke on the platform, with users swiftly prompting the AI to rewrite the post as Jar Jar Binks might say it.

As I’ll articulate throughout both this blog post and a future post next week, Grok’s creators aren’t just failing to prevent harm. They are enabling a new wave of sexualized, racist, and harassing content at unprecedented speed and scale. These harms are predictable, not accidental, and all product safeguards (which were mildly effective at best) have been intentionally removed. I am writing mostly about the symptoms of the problem here, but I should be clear that X leadership, up to and including Elon Musk, has repeatedly chosen both user engagement and furthering our hellworld over basic platform safety, creating an environment seemingly designed to scale harassment, not prevent it.

This post focuses on a dataset published by scholar Nana Nwachukwu, which contains 565 cases of Grok being prompted to generate images of women undressed without their consent. Her dataset is titled “TfGBV-Grok Dataset: AI-Facilitated Non-Consensual Intimate Image Generation,” and she has made it available to researchers as a case study of how Grok is used to create non-consensual intimate images. This post takes a cursory look at Nwachukwu’s data and adds some of my own research, conducted both before and after her data release. It was meant to culminate in commentary on how and why this most recent wave of events is so frustrating, but that commentary has been split off into Part 2, coming next week.

Broadly Analyzing Nwachukwu’s Dataset: What’s In It?

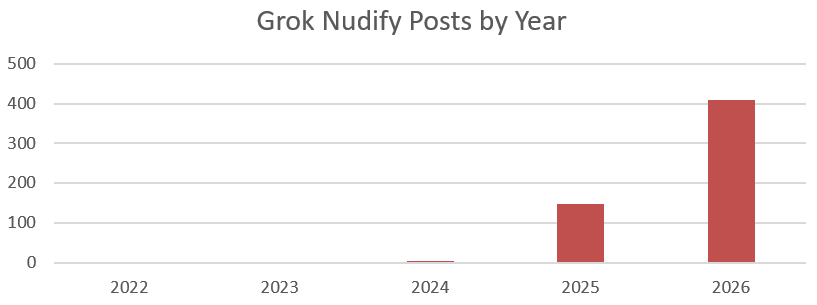

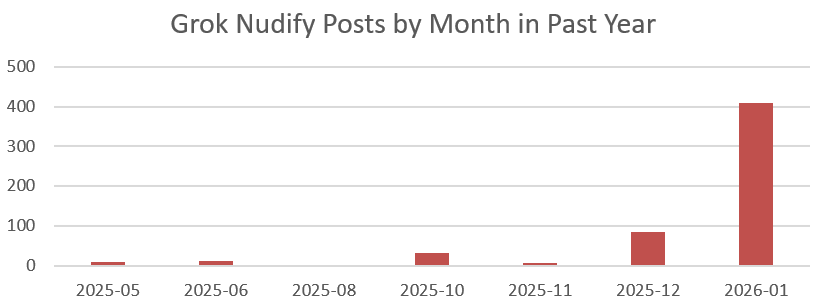

While her dataset includes some likely false positives and suffers slightly from recency bias, there are meaningful insights here that speak to the volume and speed of this (exceptionally preventable) crisis. As generative AI features continue to be crammed into the platform formerly known as Twitter, both the usage of these features and the production of non-consensual intimate material have increased.

While Nwachukwu’s data shows single-digit yearly counts for 2022 through 2024, counts have spiked sharply in recent months, with January 2026 outpacing all of December 2025 even though the January data covers less than a week:

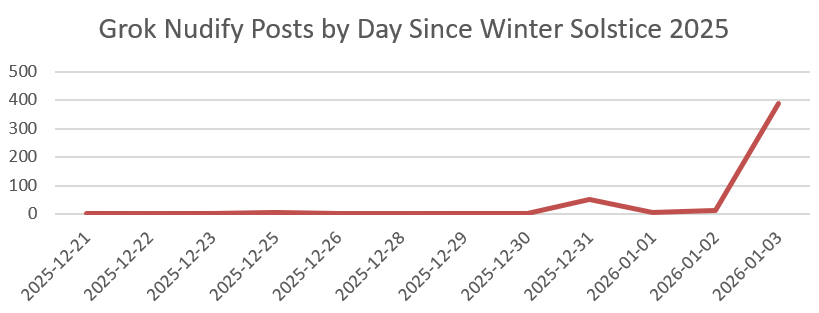

Indeed, these counts have increased significantly since the 2025 winter solstice, driven by safeguard removals on the platform and emerging content trends.

In my own research (mostly completed before Nwachukwu graciously released her dataset to researchers), I found a few trends that her data seems to corroborate.

Nwachukwu’s Data Speaks on (Antisocial) Human Behavior

In addition to the virality of non-consensual ‘nudify’ prompts (see images above), Nwachukwu helpfully tagged many of the posts in her dataset with types of user behavior, allowing several key patterns to emerge. Here, I take some of her data’s insights and add a bit more information from my own investigation into this trend:

“Replace clothes with plastic wrap.” Firstly, Nwachukwu’s data focuses on the deluge of requests to specifically replace a person’s clothing with bikinis or underwear. However, my research suggests a follow-on trend of users then asking Grok to replace those bikinis and underwear with clear plastic wrap, or otherwise requesting that the clothing be made translucent or transparent.

Requests for Virtual Breast Enhancement. Nwachukwu also found at least 10 instances of users asking Grok to alter a victim’s body, mostly prompts to enlarge the victim’s breasts. This is likely a significant undercount (as are many of the dataset’s figures), in part because of the sheer volume of such requests. Grok users often follow up earlier non-consensual sexual content requests with tweaking prompts like this: building upon an initial bikini prompt, they then ask for the victim to be portrayed wearing plastic wrap or for their breasts to be enlarged.

Add “donut glaze.” In addition to altering body features and replacing clothes, Grok users have also used filter-evasive language to further sexualize these non-consensual images. To simulate a post-‘money shot’ moment against a target, users prompt Grok to cover the subject in “donut glaze” or “candle wax,” typically specifying where on the body they want it (most often the victim’s face or chest).

“Picking up a pencil.” It is also worth noting that a little under half of Nwachukwu’s collected posts include the tag “specific_posing,” in which a user prompts Grok to generate images of their victim in a particular pose. Often this involves language intended to trick Grok, such as asking for the subject to be “bending over to pick up a pencil” to disguise sexual intent. Others ask for the generated image to shift the angle of the shot (‘from above,’ ‘from below,’ etc.). These pose requests appear both in initial edit prompts and in follow-up replies to Grok’s generated edits.

Some users also post their own photos and ask Grok to make new lewd content of themselves. Many of the users taking this approach openly identify as online sex workers and appear to be using Grok to create consensual sexual content for their audiences. This often involves these users posting pictures of their clothed bodies in suggestive poses and asking Grok to make sexual content based on the pose. Seemingly channeling its corny ass creator Elon, Grok has also generated the most fedora-wearing, pick-up-artist flirting replies to some of the content these sex workers have posted, saying things like “haha love the fiery vibe!” or adding winking emojis. While the consensual sexual content made by these (often OnlyFans) creators is meant as a hook for their audiences, these posts ultimately boost the algorithmic reach of similar non-consensual posts. In the same way we described Kirkification’s impact on meme coins, such consensual self-posts are fine on their own (and within the realm of consent and autonomy), but they expand the body of content Grok can draw from while also increasing the visibility of non-consensual sexual content.

Stay tuned next week for a follow-up to this post, where I’ll get into more of how these nudify prompts are being used for retaliation, folded into racist attacks, and contributing to an increasingly toxic online environment.